
| author | Chris Akritidis <43294513+cakrit@users.noreply.github.com> | 2023-02-17 12:20:08 -0800 |
|---|---|---|
| committer | GitHub <noreply@github.com> | 2023-02-17 12:20:08 -0800 |
| commit | 1413b5bac327e8f90229361fbd9005aa0e139fa9 (patch) | |
| tree | a61dfb806f26187428dfdce85b6ae773b4aad483 /docs | |
| parent | 851ce5a184abd4f38377d826635848093f022f4f (diff) | |

Reorg learn 021723 (#14556)

* Change titles of agent alert notifications

* Reintroduce netdata for iot

* Eliminate guides category, merge health config docs

* Rename setup to configuration

* Codacy fixes and move health config reference

Diffstat (limited to 'docs')

29 files changed, 28 insertions, 1131 deletions

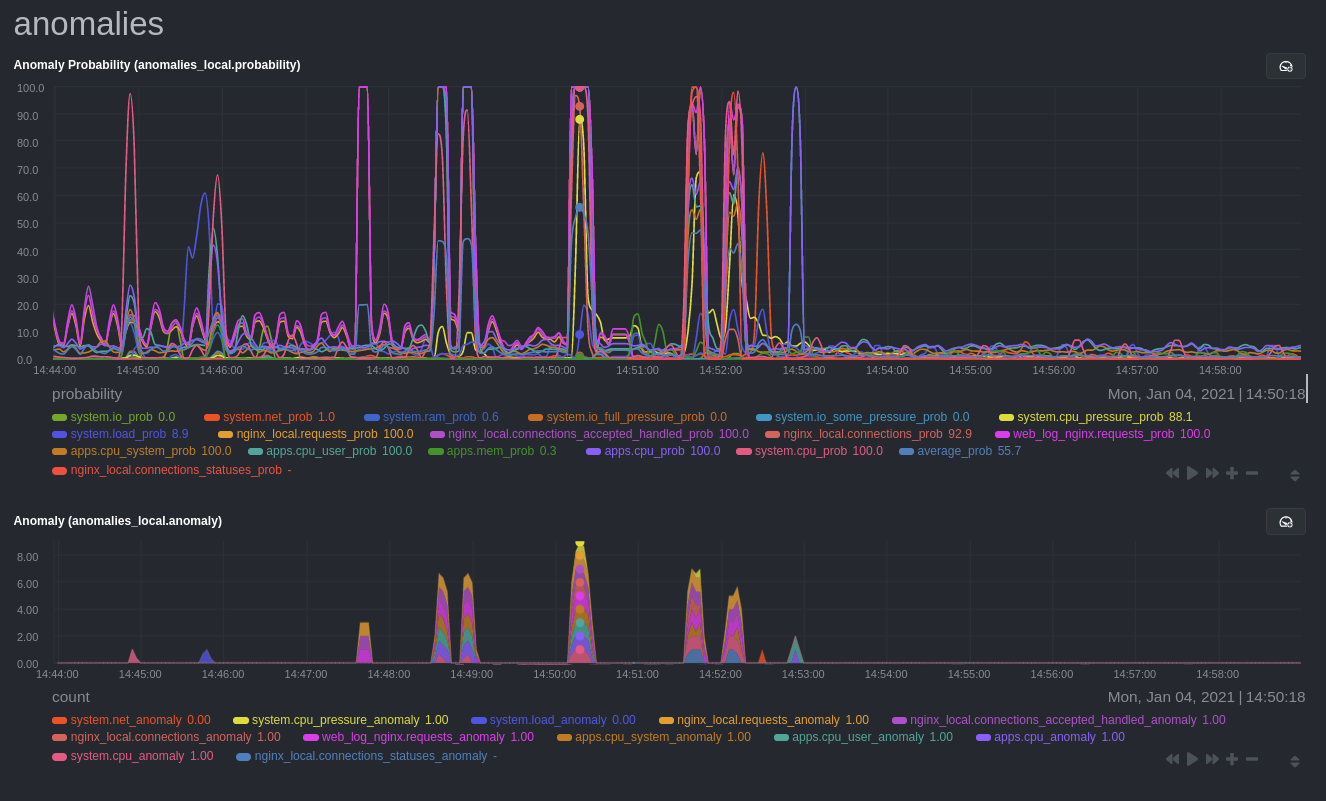

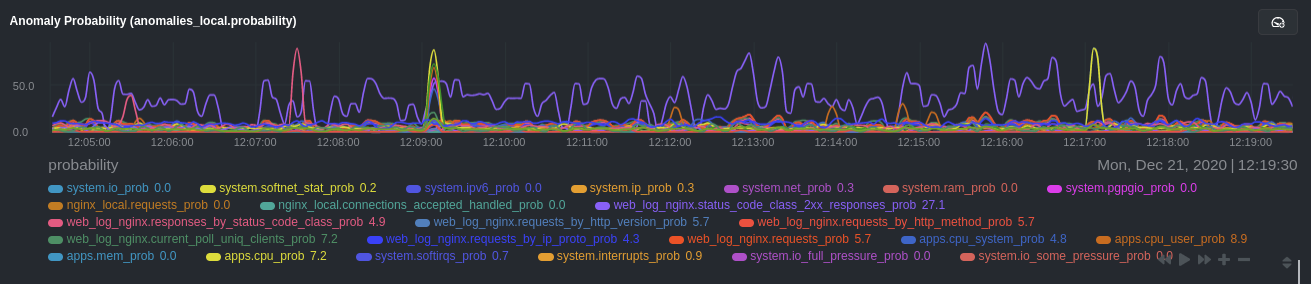

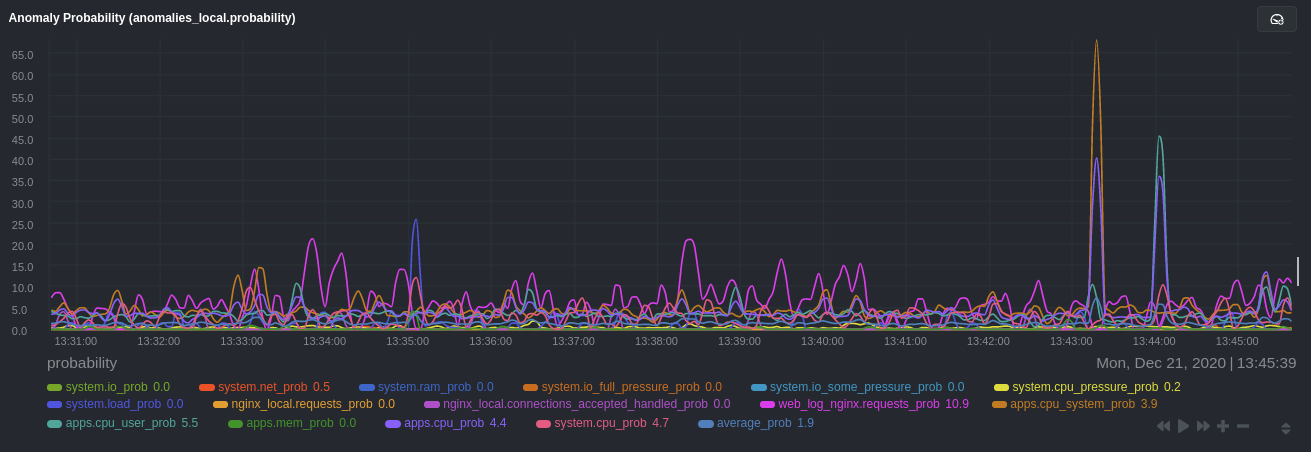

diff --git a/docs/cloud/insights/anomaly-advisor.md b/docs/cloud/insights/anomaly-advisor.md index 0e99522c4b..adce593a4d 100644 --- a/docs/cloud/insights/anomaly-advisor.md +++ b/docs/cloud/insights/anomaly-advisor.md @@ -32,8 +32,6 @@ To enable ML on your Netdata Agent, you need to edit the `[ml]` section in your At a minimum you just need to set `enabled = yes` to enable ML with default params. More details about configuration can be found in the [Netdata Agent ML docs](https://learn.netdata.cloud/docs/agent/ml#configuration). -**Note**: Follow [this guide](https://github.com/netdata/netdata/blob/master/docs/guides/step-by-step/step-04.md) if you are unfamiliar with making configuration changes in Netdata. - When you have finished your configuration, restart Netdata with a command like `sudo systemctl restart netdata` for the config changes to take effect. You can find more info on restarting Netdata [here](https://github.com/netdata/netdata/blob/master/docs/configure/start-stop-restart.md). After a brief delay, you should see the number of `trained` dimensions start to increase on the "dimensions" chart of the "Anomaly Detection" menu on the Overview page. By default the `minimum num samples to train = 3600` parameter means at least 1 hour of data is required to train initial models, but you could set this to `900` if you want to train initial models quicker but on less data. Over time, they will retrain on up to `maximum num samples to train = 14400` (4 hours by default), but you could increase this is you wanted to train on more data. 
diff --git a/docs/configure/common-changes.md b/docs/configure/common-changes.md index 933525f9a3..4a2ca7c68a 100644 --- a/docs/configure/common-changes.md +++ b/docs/configure/common-changes.md @@ -5,7 +5,7 @@ custom_edit_url: "https://github.com/netdata/netdata/edit/master/docs/configure/ sidebar_label: "Common configuration changes" learn_status: "Published" learn_topic_type: "Tasks" -learn_rel_path: "Setup" +learn_rel_path: "Configuration" --> # Common configuration changes diff --git a/docs/configure/nodes.md b/docs/configure/nodes.md index 0b6ebe6ab3..9ed1c8966e 100644 --- a/docs/configure/nodes.md +++ b/docs/configure/nodes.md @@ -2,10 +2,10 @@ title: "Configure the Netdata Agent" description: "Netdata is zero-configuration for most users, but complex infrastructures may require you to tweak some of the Agent's granular settings." custom_edit_url: "https://github.com/netdata/netdata/edit/master/docs/configure/nodes.md" -sidebar_label: "Setup" +sidebar_label: "Configuration" learn_status: "Published" learn_topic_type: "Tasks" -learn_rel_path: "Setup" +learn_rel_path: "Configuration" sidebar_position: 30 --> diff --git a/docs/configure/secure-nodes.md b/docs/configure/secure-nodes.md index c3d6a33e80..5381fcc273 100644 --- a/docs/configure/secure-nodes.md +++ b/docs/configure/secure-nodes.md @@ -5,7 +5,7 @@ custom_edit_url: "https://github.com/netdata/netdata/edit/master/docs/configure/ sidebar_label: "Secure your nodes" learn_status: "Published" learn_topic_type: "Tasks" -learn_rel_path: "Setup" +learn_rel_path: "Configuration" --> # Secure your nodes diff --git a/docs/export/enable-connector.md b/docs/export/enable-connector.md index 8efd61bde7..02e380e15d 100644 --- a/docs/export/enable-connector.md +++ b/docs/export/enable-connector.md @@ -5,7 +5,7 @@ custom_edit_url: "https://github.com/netdata/netdata/edit/master/docs/export/ena sidebar_label: "Enable an exporting connector" learn_status: "Published" learn_topic_type: "Tasks" -learn_rel_path: "Setup" 
+learn_rel_path: "Configuration" --> # Enable an exporting connector diff --git a/docs/guides/collect-apache-nginx-web-logs.md b/docs/guides/collect-apache-nginx-web-logs.md index 87137332c2..dc3afc045d 100644 --- a/docs/guides/collect-apache-nginx-web-logs.md +++ b/docs/guides/collect-apache-nginx-web-logs.md @@ -4,7 +4,7 @@ sidebar_label: "Monitor Nginx or Apache web server log files with Netdata" custom_edit_url: https://github.com/netdata/netdata/edit/master/docs/guides/collect-apache-nginx-web-logs.md learn_status: "Published" learn_topic_type: "Tasks" -learn_rel_path: "Guides" +learn_rel_path: "Miscellaneous" --> # Monitor Nginx or Apache web server log files with Netdata @@ -120,12 +120,5 @@ You can also edit this file directly with `edit-config`: ./edit-config health.d/weblog.conf ``` -For more information about editing the defaults or writing new alarm entities, see our [health monitoring -documentation](https://github.com/netdata/netdata/blob/master/health/README.md). - -## What's next? - -Now that you have web log collection up and running, we recommend you take a look at the collector's [documentation](https://github.com/netdata/go.d.plugin/blob/master/modules/weblog/README.md) for some ideas of how you can turn these rather "boring" logs into powerful real-time tools for keeping your servers happy. - -Don't forget to give GitHub user [Wing924](https://github.com/Wing924) a big 👍 for his hard work in starting up the Go -refactoring effort. +For more information about editing the defaults or writing new alarm entities, see our +[health monitoring documentation](https://github.com/netdata/netdata/blob/master/health/README.md). 
diff --git a/docs/guides/collect-unbound-metrics.md b/docs/guides/collect-unbound-metrics.md index e033af5cb5..c5f4deb518 100644 --- a/docs/guides/collect-unbound-metrics.md +++ b/docs/guides/collect-unbound-metrics.md @@ -5,7 +5,7 @@ date: 2020-03-31 custom_edit_url: https://github.com/netdata/netdata/edit/master/docs/guides/collect-unbound-metrics.md learn_status: "Published" learn_topic_type: "Tasks" -learn_rel_path: "Guides" +learn_rel_path: "Miscellaneous" --> # Monitor Unbound DNS servers with Netdata diff --git a/docs/guides/configure/performance.md b/docs/guides/configure/performance.md index 820e8f5982..9782f9af68 100644 --- a/docs/guides/configure/performance.md +++ b/docs/guides/configure/performance.md @@ -6,7 +6,7 @@ image: /img/seo/guides/configure/performance.png custom_edit_url: https://github.com/netdata/netdata/edit/master/docs/guides/configure/performance.md learn_status: "Published" learn_topic_type: "Tasks" -learn_rel_path: "Guides" +learn_rel_path: "Configuration" --> # How to optimize the Netdata Agent's performance diff --git a/docs/guides/longer-metrics-storage.md b/docs/guides/longer-metrics-storage.md deleted file mode 100644 index bea3b8a51f..0000000000 --- a/docs/guides/longer-metrics-storage.md +++ /dev/null @@ -1,162 +0,0 @@ -<!-- -title: "Netdata Longer Metrics Retention" -sidebar_label: "Netdata Longer Metrics Retention" -description: "" -custom_edit_url: https://github.com/netdata/netdata/edit/master/docs/guides/longer-metrics-storage.md -learn_status: "Published" -learn_topic_type: "Tasks" -learn_rel_path: "Guides" ---> - -# Netdata Longer Metrics Retention - -Metrics retention affects 3 parameters on the operation of a Netdata Agent: - -1. The disk space required to store the metrics. -2. The memory the Netdata Agent will require to have that retention available for queries. -3. The CPU resources that will be required to query longer time-frames. 
- -As retention increases, the resources required to support that retention increase too. - -Since Netdata Agents usually run at the edge, inside production systems, Netdata Agent **parents** should be considered. When having a **parent - child** setup, the child (the Netdata Agent running on a production system) delegates all its functions, including longer metrics retention and querying, to the parent node that can dedicate more resources to this task. A single Netdata Agent parent can centralize multiple children Netdata Agents (dozens, hundreds, or even thousands depending on its available resources). - - -## Ephemerality of metrics - -The ephemerality of metrics plays an important role in retention. In environments where metrics stop being collected and new metrics are constantly being generated, we are interested about 2 parameters: - -1. The **expected concurrent number of metrics** as an average for the lifetime of the database. - This affects mainly the storage requirements. - -2. The **expected total number of unique metrics** for the lifetime of the database. - This affects mainly the memory requirements for having all these metrics indexed and available to be queried. - -## Granularity of metrics - -The granularity of metrics (the frequency they are collected and stored, i.e. their resolution) is significantly affecting retention. - -Lowering the granularity from per second to every two seconds, will double their retention and half the CPU requirements of the Netdata Agent, without affecting disk space or memory requirements. - -## Which database mode to use - -Netdata Agents support multiple database modes. - -The default mode `[db].mode = dbengine` has been designed to scale for longer retentions. - -The other available database modes are designed to minimize resource utilization and should usually be considered on **parent - child** setups at the children side. - -So, - -* On a single node setup, use `[db].mode = dbengine` to increase retention. 
-* On a **parent - child** setup, use `[db].mode = dbengine` on the parent to increase retention and a more resource efficient mode (like `save`, `ram` or `none`) for the child to minimize resources utilization. - -To use `dbengine`, set this in `netdata.conf` (it is the default): - -``` -[db] - mode = dbengine -``` - -## Tiering - -`dbengine` supports tiering. Tiering allows having up to 3 versions of the data: - -1. Tier 0 is the high resolution data. -2. Tier 1 is the first tier that samples data every 60 data collections of Tier 0. -3. Tier 2 is the second tier that samples data every 3600 data collections of Tier 0 (60 of Tier 1). - -To enable tiering set `[db].storage tiers` in `netdata.conf` (the default is 1, to enable only Tier 0): - -``` -[db] - mode = dbengine - storage tiers = 3 -``` - -## Disk space requirements - -Netdata Agents require about 1 bytes on disk per database point on Tier 0 and 4 times more on higher tiers (Tier 1 and 2). They require 4 times more storage per point compared to Tier 0, because for every point higher tiers store `min`, `max`, `sum`, `count` and `anomaly rate` (the values are 5, but they require 4 times the storage because `count` and `anomaly rate` are 16-bit integers). The `average` is calculated on the fly at query time using `sum / count`. - -### Tier 0 - per second for a week - -For 2000 metrics, collected every second and retained for a week, Tier 0 needs: 1 byte x 2000 metrics x 3600 secs per hour x 24 hours per day x 7 days per week = 1100MB. - -The setting to control this is in `netdata.conf`: - -``` -[db] - mode = dbengine - - # per second data collection - update every = 1 - - # enable only Tier 0 - storage tiers = 1 - - # Tier 0, per second data for a week - dbengine multihost disk space MB = 1100 -``` - -By setting it to `1100` and restarting the Netdata Agent, this node will start maintaining about a week of data. But pay attention to the number of metrics. 
If you have more than 2000 metrics on a node, or you need more that a week of high resolution metrics, you may need to adjust this setting accordingly. - -### Tier 1 - per minute for a month - -Tier 1 is by default sampling the data every 60 points of Tier 0. If Tier 0 is per second, then Tier 1 is per minute. - -Tier 1 needs 4 times more storage per point compared to Tier 0. So, for 2000 metrics, with per minute resolution, retained for a month, Tier 1 needs: 4 bytes x 2000 metrics x 60 minutes per hour x 24 hours per day x 30 days per month = 330MB. - -Do this in `netdata.conf`: - -``` -[db] - mode = dbengine - - # per second data collection - update every = 1 - - # enable only Tier 0 and Tier 1 - storage tiers = 2 - - # Tier 0, per second data for a week - dbengine multihost disk space MB = 1100 - - # Tier 1, per minute data for a month - dbengine tier 1 multihost disk space MB = 330 -``` - -Once `netdata.conf` is edited, the Netdata Agent needs to be restarted for the changes to take effect. - -### Tier 2 - per hour for a year - -Tier 2 is by default sampling data every 3600 points of Tier 0 (60 of Tier 1). If Tier 0 is per second, then Tier 2 is per hour. - -The storage requirements are the same to Tier 1. - -For 2000 metrics, with per hour resolution, retained for a year, Tier 2 needs: 4 bytes x 2000 metrics x 24 hours per day x 365 days per year = 67MB. - -Do this in `netdata.conf`: - -``` -[db] - mode = dbengine - - # per second data collection - update every = 1 - - # enable only Tier 0 and Tier 1 - storage tiers = 3 - - # Tier 0, per second data for a week - dbengine multihost disk space MB = 1100 - - # Tier 1, per minute data for a month - dbengine tier 1 multihost disk space MB = 330 - - # Tier 2, per hour data for a year - dbengine tier 2 multihost disk space MB = 67 -``` - -Once `netdata.conf` is edited, the Netdata Agent needs to be restarted for the changes to take effect. 
- - - diff --git a/docs/guides/monitor-cockroachdb.md b/docs/guides/monitor-cockroachdb.md index bc3c47f939..0d5397c0c4 100644 --- a/docs/guides/monitor-cockroachdb.md +++ b/docs/guides/monitor-cockroachdb.md @@ -4,7 +4,7 @@ sidebar_label: "Monitor CockroachDB metrics with Netdata" custom_edit_url: https://github.com/netdata/netdata/edit/master/docs/guides/monitor-cockroachdb.md learn_status: "Published" learn_topic_type: "Tasks" -learn_rel_path: "Guides" +learn_rel_path: "Miscellaneous" --> # Monitor CockroachDB metrics with Netdata @@ -29,7 +29,6 @@ Let's dive in and walk through the process of monitoring CockroachDB metrics wit - [Configure the CockroachDB collector](#configure-the-cockroachdb-collector) - [Manual setup for a local CockroachDB database](#manual-setup-for-a-local-cockroachdb-database) - [Tweak CockroachDB alarms](#tweak-cockroachdb-alarms) - - [What's next?](#whats-next) ## Configure the CockroachDB collector @@ -117,23 +116,3 @@ cd /etc/netdata/ # Replace with your Netdata configuration directory, if not /et ``` For more information about editing the defaults or writing new alarm entities, see our documentation on [configuring health alarms](https://github.com/netdata/netdata/blob/master/docs/monitor/configure-alarms.md). - -## What's next? - -Now that you're collecting metrics from your CockroachDB databases, let us know how it's working for you! There's always -room for improvement or refinement based on real-world use cases. Feel free to [file an -issue](https://github.com/netdata/netdata/issues/new?assignees=&labels=bug%2Cneeds+triage&template=BUG_REPORT.yml) with -your -thoughts. 
- -Also, be sure to check out these useful resources: - -- [Netdata's CockroachDB documentation](https://github.com/netdata/go.d.plugin/blob/master/modules/cockroachdb/README.md) -- [Netdata's CockroachDB configuration](https://github.com/netdata/go.d.plugin/blob/master/config/go.d/cockroachdb.conf) -- [Netdata's CockroachDB alarms](https://github.com/netdata/netdata/blob/29d9b5e51603792ee27ef5a21f1de0ba8e130158/health/health.d/cockroachdb.conf) -- [CockroachDB homepage](https://www.cockroachlabs.com/product/) -- [CockroachDB documentation](https://www.cockroachlabs.com/docs/stable/) -- [`_status/vars` endpoint docs](https://www.cockroachlabs.com/docs/stable/monitoring-and-alerting.html#prometheus-endpoint) -- [Monitor CockroachDB with Prometheus](https://www.cockroachlabs.com/docs/stable/monitor-cockroachdb-with-prometheus.html) - - diff --git a/docs/guides/monitor-hadoop-cluster.md b/docs/guides/monitor-hadoop-cluster.md index 0fb3b7c407..91282b9559 100644 --- a/docs/guides/monitor-hadoop-cluster.md +++ b/docs/guides/monitor-hadoop-cluster.md @@ -4,7 +4,7 @@ sidebar_label: "Monitor a Hadoop cluster with Netdata" custom_edit_url: https://github.com/netdata/netdata/edit/master/docs/guides/monitor-hadoop-cluster.md learn_status: "Published" learn_topic_type: "Tasks" -learn_rel_path: "Guides" +learn_rel_path: "Miscellaneous" --> # Monitor a Hadoop cluster with Netdata @@ -188,20 +188,5 @@ sudo /etc/netdata/edit-config health.d/hdfs.conf sudo /etc/netdata/edit-config health.d/zookeeper.conf ``` -For more information about editing the defaults or writing new alarm entities, see our [health monitoring -documentation](https://github.com/netdata/netdata/blob/master/health/README.md). - -## What's next? 
- -If you're having issues with Netdata auto-detecting your HDFS/Zookeeper servers, or want to help improve how Netdata -collects or presents metrics from these services, feel free to [file an -issue](https://github.com/netdata/netdata/issues/new?assignees=&labels=bug%2Cneeds+triage&template=BUG_REPORT.yml). - -- Read up on the [HDFS configuration - file](https://github.com/netdata/go.d.plugin/blob/master/config/go.d/hdfs.conf) to understand how to configure - global options or per-job options, such as username/password, TLS certificates, timeouts, and more. -- Read up on the [Zookeeper configuration - file](https://github.com/netdata/go.d.plugin/blob/master/config/go.d/zookeeper.conf) to understand how to configure - global options or per-job options, timeouts, TLS certificates, and more. - - +For more information about editing the defaults or writing new alarm entities, see our +[health monitoring documentation](https://github.com/netdata/netdata/blob/master/health/README.md). diff --git a/docs/guides/monitor/anomaly-detection-python.md b/docs/guides/monitor/anomaly-detection-python.md deleted file mode 100644 index 8e62019201..0000000000 --- a/docs/guides/monitor/anomaly-detection-python.md +++ /dev/null @@ -1,193 +0,0 @@ -<!-- -title: "Detect anomalies in systems and applications" -sidebar_label: "Detect anomalies in systems and applications" -description: "Detect anomalies in any system, container, or application in your infrastructure with machine learning and the open-source Netdata Agent." 
-image: /img/seo/guides/monitor/anomaly-detection.png -author: "Joel Hans" -author_title: "Editorial Director, Technical & Educational Resources" -author_img: "/img/authors/joel-hans.jpg" -custom_edit_url: https://github.com/netdata/netdata/edit/master/docs/guides/monitor/anomaly-detection-python.md -learn_status: "Published" -learn_topic_type: "Tasks" -learn_rel_path: "Guides/Monitor" ---> - -# Detect anomalies in systems and applications - -Beginning with v1.27, the [open-source Netdata Agent](https://github.com/netdata/netdata) is capable of unsupervised -[anomaly detection](https://en.wikipedia.org/wiki/Anomaly_detection) with machine learning (ML). As with all things -Netdata, the anomalies collector comes with preconfigured alarms and instant visualizations that require no query -languages or organizing metrics. You configure the collector to look at specific charts, and it handles the rest. - -Netdata's implementation uses a handful of functions in the [Python Outlier Detection (PyOD) -library](https://github.com/yzhao062/pyod/tree/master), which periodically runs a `train` function that learns what -"normal" looks like on your node and creates an ML model for each chart, then utilizes the -[`predict_proba()`](https://pyod.readthedocs.io/en/latest/api_cc.html#pyod.models.base.BaseDetector.predict_proba) and -[`predict()`](https://pyod.readthedocs.io/en/latest/api_cc.html#pyod.models.base.BaseDetector.predict) PyOD functions to -quantify how anomalous certain charts are. - -All these metrics and alarms are available for centralized monitoring in [Netdata Cloud](https://app.netdata.cloud). If -you choose to sign up for Netdata Cloud and [connect your nodes](https://github.com/netdata/netdata/blob/master/claim/README.md), you will have the ability to run -tailored anomaly detection on every node in your infrastructure, regardless of its purpose or workload. 
- -In this guide, you'll learn how to set up the anomalies collector to instantly detect anomalies in an Nginx web server -and/or the node that hosts it, which will give you the tools to configure parallel unsupervised monitors for any -application in your infrastructure. Let's get started. - - - -## Prerequisites - -- A node running the Netdata Agent. If you don't yet have that, [install Netdata](https://github.com/netdata/netdata/blob/master/packaging/installer/README.md). -- A Netdata Cloud account. [Sign up](https://app.netdata.cloud) if you don't have one already. -- Familiarity with configuring the Netdata Agent with [`edit-config`](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md). -- _Optional_: An Nginx web server running on the same node to follow the example configuration steps. - -## Install required Python packages - -The anomalies collector uses a few Python packages, available with `pip3`, to run ML training. It requires -[`numba`](http://numba.pydata.org/), [`scikit-learn`](https://scikit-learn.org/stable/), -[`pyod`](https://pyod.readthedocs.io/en/latest/), in addition to -[`netdata-pandas`](https://github.com/netdata/netdata-pandas), which is a package built by the Netdata team to pull data -from a Netdata Agent's API into a [Pandas](https://pandas.pydata.org/). Read more about `netdata-pandas` on its [package -repo](https://github.com/netdata/netdata-pandas) or in Netdata's [community -repo](https://github.com/netdata/community/tree/main/netdata-agent-api/netdata-pandas). - -```bash -# Become the netdata user -sudo su -s /bin/bash netdata - -# Install required packages for the netdata user -pip3 install --user netdata-pandas==0.0.38 numba==0.50.1 scikit-learn==0.23.2 pyod==0.8.3 -``` - -> If the `pip3` command fails, you need to install it. For example, on an Ubuntu system, use `sudo apt install -> python3-pip`. - -Use `exit` to become your normal user again. 
- -## Enable the anomalies collector - -Navigate to your [Netdata config directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md#the-netdata-config-directory) and use `edit-config` -to open the `python.d.conf` file. - -```bash -sudo ./edit-config python.d.conf -``` - -In `python.d.conf` file, search for the `anomalies` line. If the line exists, set the value to `yes`. Add the line -yourself if it doesn't already exist. Either way, the final result should look like: - -```conf -anomalies: yes -``` - -[Restart the Agent](https://github.com/netdata/netdata/blob/master/docs/configure/start-stop-restart.md) with `sudo systemctl restart netdata`, or the [appropriate -method](https://github.com/netdata/netdata/blob/master/docs/configure/start-stop-restart.md) for your system, to start up the anomalies collector. By default, the -model training process runs every 30 minutes, and uses the previous 4 hours of metrics to establish a baseline for -health and performance across the default included charts. - -> 💡 The anomaly collector may need 30-60 seconds to finish its initial training and have enough data to start -> generating anomaly scores. You may need to refresh your browser tab for the **Anomalies** section to appear in menus -> on both the local Agent dashboard or Netdata Cloud. - -## Configure the anomalies collector - -Open `python.d/anomalies.conf` with `edit-conf`. - -```bash -sudo ./edit-config python.d/anomalies.conf -``` - -The file contains many user-configurable settings with sane defaults. Here are some important settings that don't -involve tweaking the behavior of the ML training itself. - -- `charts_regex`: Which charts to train models for and run anomaly detection on, with each chart getting a separate - model. -- `charts_to_exclude`: Specific charts, selected by the regex in `charts_regex`, to exclude. -- `train_every_n`: How often to train the ML models. 
-- `train_n_secs`: The number of historical observations to train each model on. The default is 4 hours, but if your node - doesn't have historical metrics going back that far, consider [changing the metrics retention - policy](https://github.com/netdata/netdata/blob/master/docs/store/change-metrics-storage.md) or reducing this window. -- `custom_models`: A way to define custom models that you want anomaly probabilities for, including multi-node or - streaming setups. - -> ⚠️ Setting `charts_regex` with many charts or `train_n_secs` to a very large number will have an impact on the -> resources and time required to train a model for every chart. The actual performance implications depend on the -> resources available on your node. If you plan on changing these settings beyond the default, or what's mentioned in -> this guide, make incremental changes to observe the performance impact. Considering `train_max_n` to cap the number of -> observations actually used to train on. - -### Run anomaly detection on Nginx and log file metrics - -As mentioned above, this guide uses an Nginx web server to demonstrate how the anomalies collector works. You must -configure the collector to monitor charts from the -[Nginx](https://github.com/netdata/go.d.plugin/blob/master/modules/nginx/README.md) and [web -log](https://github.com/netdata/go.d.plugin/blob/master/modules/weblog/README.md) collectors. - -`charts_regex` allows for some basic regex, such as wildcards (`*`) to match all contexts with a certain pattern. For -example, `system\..*` matches with any chart with a context that begins with `system.`, and ends in any number of other -characters (`.*`). Note the escape character (`\`) around the first period to capture a period character exactly, and -not any character. 
- -Change `charts_regex` in `anomalies.conf` to the following: - -```conf - charts_regex: 'system\..*|nginx_local\..*|web_log_nginx\..*|apps.cpu|apps.mem' -``` - -This value tells the anomaly collector to train against every `system.` chart, every `nginx_local` chart, every -`web_log_nginx` chart, and specifically the `apps.cpu` and `apps.mem` charts. - - - -### Remove some metrics from anomaly detection - -As you can see in the above screenshot, this node is now looking for anomalies in many places. The result is a single -`anomalies_local.probability` chart with more than twenty dimensions, some of which the dashboard hides at the bottom of -a scrollable area. In addition, training and analyzing the anomaly collector on many charts might require more CPU -utilization that you're willing to give. - -First, explicitly declare which `system.` charts to monitor rather than of all of them using regex (`system\..*`). - -```conf - charts_regex: 'system\.cpu|system\.load|system\.io|system\.net|system\.ram|nginx_local\..*|web_log_nginx\..*|apps.cpu|apps.mem' -``` - -Next, remove some charts with the `charts_to_exclude` setting. For this example, using an Nginx web server, focus on the -volume of requests/responses, not, for example, which type of 4xx response a user might receive. 
- -```conf - charts_to_exclude: 'web_log_nginx.excluded_requests,web_log_nginx.responses_by_status_code_class,web_log_nginx.status_code_class_2xx_responses,web_log_nginx.status_code_class_4xx_responses,web_log_nginx.current_poll_uniq_clients,web_log_nginx.requests_by_http_method,web_log_nginx.requests_by_http_version,web_log_nginx.requests_by_ip_proto' -``` - - - -Apply the ideas behind the collector's regex and exclude settings to any other -[system](https://github.com/netdata/netdata/blob/master/docs/collect/system-metrics.md), [container](https://github.com/netdata/netdata/blob/master/docs/collect/container-metrics.md), or -[application](https://github.com/netdata/netdata/blob/master/docs/collect/application-metrics.md) metrics you want to detect anomalies for. - -## What's next? - -Now that you know how to set up unsupervised anomaly detection in the Netdata Agent, using an Nginx web server as an -example, it's time to apply that knowledge to other mission-critical parts of your infrastructure. If you're not sure -what to monitor next, check out our list of [collectors](https://github.com/netdata/netdata/blob/master/collectors/COLLECTORS.md) to see what kind of metrics Netdata -can collect from your systems, containers, and applications. - -Keep on moving to [part 2](https://github.com/netdata/netdata/blob/master/docs/guides/monitor/visualize-monitor-anomalies.md), which covers the charts and alarms -Netdata creates for unsupervised anomaly detection. - -For a differen |